Socratic Mind: Scaling Socratic Dialogue with Generative AI — and Its Measurable Impact on Learning

In higher education, few challenges are as enduring as assessing understanding — not just whether students can recall information, but whether they can reason, explain, and apply what they know. Traditional assessments often fall short of capturing that depth.

That's why we built Socratic Mind (SM), a GenAI-powered assessment tool that brings the spirit of Socratic dialogue into scalable, digital form — prompting students to explain, justify, and reflect on their reasoning rather than simply produce an answer.

Our new study, conducted with 173 undergraduate students in Georgia Tech's CS 1301: Introduction to Computing, provides early empirical evidence that AI-mediated Socratic questioning can improve student engagement, learning, and higher-order thinking.

From Automation to Understanding

Generative AI has made it possible to administer oral assessments and deliver personalized feedback at scale. But most AI tools stop at the surface — testing what students remember, not what they understand.

Socratic Mind flips that script. Instead of evaluating correctness alone, it engages students in adaptive conversation. When a student answers, the AI responds with follow-up questions like "Why does this approach work?" or "What if we changed this condition?" until it detects conceptual clarity.

Behind each question is a simple idea: understanding is something you demonstrate through dialogue.
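The adaptive loop described above can be sketched in a few lines of Python. This is not Socratic Mind's implementation, which this summary does not describe. The function `socratic_dialogue`, the fixed `FOLLOW_UPS` list, the `judge_clarity` and `ask_student` callables, and the `max_turns` cap are all hypothetical stand-ins. In a real GenAI tool, both the follow-up questions and the clarity judgment would presumably come from a language model rather than from a fixed list and a keyword check.

```python
from typing import Callable, List

# Hypothetical follow-up prompts; a GenAI tool would generate these adaptively.
FOLLOW_UPS = [
    "Why does this approach work?",
    "What if we changed this condition?",
]


def socratic_dialogue(
    first_answer: str,
    judge_clarity: Callable[[List[str]], bool],
    ask_student: Callable[[str], str],
    max_turns: int = 5,
) -> List[str]:
    """Ask follow-up questions until the (hypothetical) judge deems the student's
    explanation clear or the turn budget runs out; return all student answers."""
    answers = [first_answer]
    turns = 0
    while not judge_clarity(answers) and turns < max_turns:
        question = FOLLOW_UPS[turns % len(FOLLOW_UPS)]  # cycle through the prompts
        answers.append(ask_student(question))
        turns += 1
    return answers


# Usage with scripted stand-ins: a toy judge that treats an answer containing
# "because" as a clear explanation, and a student who replies from a script.
scripted_replies = iter([
    "It adds up the numbers in the list.",
    "Because the loop visits each element once, every value is added exactly once.",
])
transcript = socratic_dialogue(
    first_answer="I used a for loop.",
    judge_clarity=lambda answers: "because" in answers[-1].lower(),
    ask_student=lambda question: next(scripted_replies),
)
for line in transcript:
    print(line)
```

The `max_turns` cap is a design choice worth keeping in any such loop: without it, a student who never satisfies the judge would be questioned indefinitely.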

The Study

The study, "Socratic Mind: Impact of a Novel GenAI-Powered Assessment Tool on Student Learning and Higher-Order Thinking," was conducted by researchers from Georgia Tech and UC San Diego.

To evaluate how AI-driven Socratic questioning influences learning, researchers adopted a quasi-experimental, mixed-methods design in CS 1301: Introduction to Computing, a large online undergraduate course (n = 173). Students were randomly split into two comparable groups:

- Unit 3 group: Used SM to prepare for Unit 3 assessments.
- Unit 4 group: Used SM to prepare for Unit 4 assessments.

Quizzes consisted of multiple-choice and fill-in-the-blank questions, modeled after those found in the lessons, practice exercises, and problem sets. In contrast, tests required students to solve programming problems similar to those encountered during the course.

Key Findings

1. Real learning gains — especially for struggling students

As the course progressed from foundational to advanced programming topics, quiz scores declined in both groups, reflecting the increasing difficulty of the material. On the unit-level comparison, the Unit 3 group scored slightly higher than the Unit 4 group on the Unit 3 quiz and test, though these differences were not statistically significant (Figure 1). Performance on subsequent assessments and final course scores was similar across the two groups.

Figure 1: Comparison of unit-level assessment results and final scores between the groups

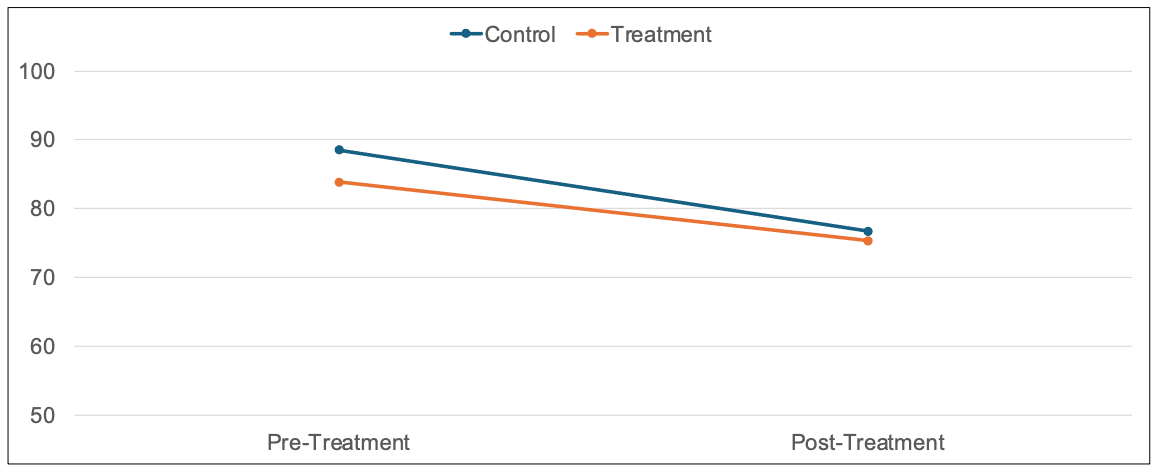

Using a difference-in-differences (DiD) approach with multiple regression analysis, we observed a significant positive interaction effect (β = .107, p < .05), indicating that engagement with the assessment tool mitigated this performance decline. While students overall experienced a decrease of approximately 11.84 points from pre- to post-intervention, the drop for students who completed the AI-based assignment was an estimated 3.30 points smaller, or roughly 8.54 points (Figure 2). This buffering effect suggests that students who engaged with Socratic Mind were more resilient to the increasing difficulty of the material.

Figure 2: Buffering effect of the SM tool on quiz scores

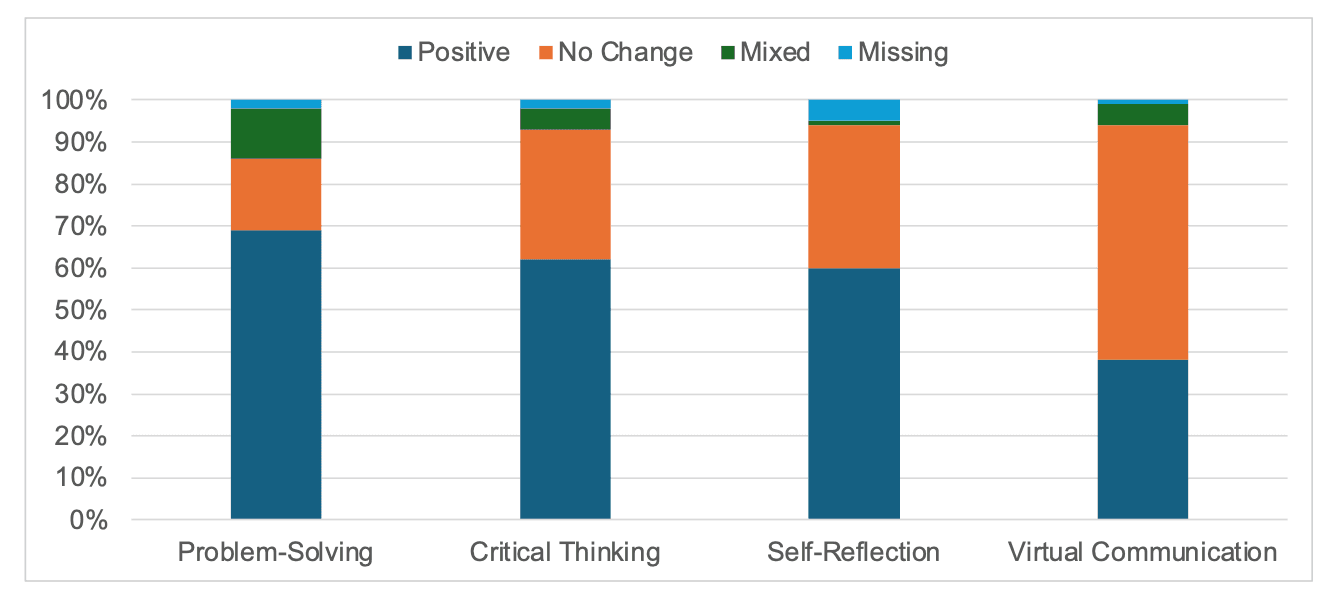
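To make the difference-in-differences logic concrete, here is a minimal Python sketch of the textbook two-group, two-period specification, score = b0 + b1·post + b2·treated + b3·(post × treated), fit by ordinary least squares with NumPy. The paper's exact regression (its covariates, how "pre" and "post" were coded, and the standardization behind β = .107) is not described in this summary, so the model form, the helper name `did_ols`, and all scores below are assumptions. The cell means are hypothetical values chosen only so that the baseline decline is 11.84 points and the interaction is 3.30 points; they are not data from the study.

```python
import numpy as np


def did_ols(y, post, treated):
    """Fit y = b0 + b1*post + b2*treated + b3*post*treated by OLS; return [b0, b1, b2, b3]."""
    X = np.column_stack([np.ones_like(post), post, treated, post * treated])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef


# Hypothetical cell means (NOT study data): key is (post, treated).
cells = {
    (0, 0): 80.00,  # comparison group, pre-intervention
    (1, 0): 68.16,  # comparison group, post: an 11.84-point decline
    (0, 1): 80.00,  # SM group, pre-intervention
    (1, 1): 71.46,  # SM group, post: an 8.54-point decline
}

# Ten hypothetical students per cell, each exactly at the cell mean (no noise).
post = np.repeat([p for p, _ in cells], 10).astype(float)
treated = np.repeat([t for _, t in cells], 10).astype(float)
y = np.repeat(list(cells.values()), 10)

b0, b1, b2, b3 = did_ols(y, post, treated)
print(f"baseline change (b1): {b1:.2f}")
print(f"DiD interaction (b3): {b3:.2f}")
print(f"SM-group change (b1 + b3): {(b1 + b3):.2f}")
```

The interaction coefficient b3 is the difference-in-differences estimate: how much smaller the SM group's pre-to-post change is than the baseline change (here -11.84 + 3.30 = -8.54 points). Because every hypothetical student sits exactly at their cell mean, OLS recovers these values exactly; with real, noisy scores the estimate would come with a standard error and a p-value. The sketch works in raw score points, matching the 3.30-point figure rather than the reported β.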

2. Students felt they learned more — and thought more deeply

Through thematic analysis of open-ended survey responses (n = 141), we examined students' perceived improvements across four higher-order thinking skills: problem-solving, critical thinking, self-reflection, and verbal communication. The majority of participants reported experiencing positive changes in problem-solving (69%), critical thinking (62%), and self-reflection (60%) (Figure 3). Students described developing more structured step-by-step reasoning, deeper conceptual understanding, and greater confidence in their approach to coding challenges. They frequently attributed these improvements to the tool's Socratic questioning method, which encouraged them to think more analytically, question assumptions, and articulate their reasoning more clearly. As one student noted, "The AI kept asking why until I could explain it clearly — and that's when I realized what I actually understood."

Figure 3: Percentage of participants reporting a perceived influence of SM on each higher-order thinking skill
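The reported shares can be translated back into approximate head counts. This sketch assumes each percentage was computed over all 141 survey respondents and rounded half-up to a whole percent; the summary states neither the denominator nor the rounding rule. It lists every count out of 141 consistent with each reported figure, and `counts_matching` is a hypothetical helper.

```python
def counts_matching(percent: int, n: int) -> list[int]:
    """Return every whole-number count k (0..n) whose share k/n rounds half-up to `percent`."""
    # Round-half-up of 100*k/n, done in integer arithmetic to avoid float error:
    # floor(100*k/n + 1/2) == floor((200*k + n) / (2*n))
    return [k for k in range(n + 1) if (200 * k + n) // (2 * n) == percent]


n = 141  # survey respondents, as reported in the summary
for skill, pct in [("problem-solving", 69), ("critical thinking", 62), ("self-reflection", 60)]:
    print(skill, pct, counts_matching(pct, n))
```

Under those assumptions, roughly 97 students reported gains in problem-solving, 87 or 88 in critical thinking, and 84 or 85 in self-reflection.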

Why This Matters

AI in education is often viewed as a double-edged sword: powerful, but liable to make students passive. Our findings suggest that, with the right design, AI can make students think harder, not less.

By embedding Socratic dialogue into the learning process, we can use GenAI not to replace instructors, but to amplify their pedagogical reach — turning every student interaction into an opportunity for reflection, reasoning, and growth.

Looking Ahead

This study offers a proof of concept for a new kind of AI-mediated assessment — one that prioritizes inquiry over instruction and understanding over answers.

As institutions continue integrating GenAI into curricula, tools like Socratic Mind can help ensure that automation deepens learning rather than dilutes it.

We're now collaborating with educators to expand Socratic Mind's applications across disciplines and explore long-term effects on critical thinking and communication skills.

In an era where information is abundant but understanding is scarce, the ability to explain your reasoning remains the highest form of intelligence.

This is a summary of the paper "Socratic Mind: Impact of a Novel GenAI-Powered Assessment Tool on Student Learning and Higher-Order Thinking," authored by Jeonghyun Lee, Jui-Tse Hung, Meryem Yilmaz Soylu, Diana Popescu, Christopher Zhang Cui, Gayane Grigoryan, David A. Joyner, and Stephen W. Harmon. It was first uploaded as a preprint to arXiv in September 2025 and has not yet undergone peer review. Therefore, the findings and conclusions should be considered preliminary.

Acknowledgements

We thank all the students and faculty who participated in this research study and provided valuable feedback that made this work possible. This research was supported by Georgia Institute of Technology.